Toxicity Ghettoing: an Amazon patent could create a gaming class system

From quitters to shouty men, toxic players deserve a special place in hell. But is this the right path to send them there?

How do you choose to vent? Scream at the top of your lungs? Punch a pillow? Queue up your favourite emo playlist and bemoan the day away? Or maybe you are one of those special people who take it out on others?

Fans of online multiplayer games know the frustration of being matched with toxic players. I remember well the hundreds of hours I invested in Modern Warfare 2, with its extremely active community and great online deathmatches. It was there, on the blood-soaked streets of Favela, that I first learned the shocking truth: my mother was a prostitute. The revelation came by way of a helpful 13-year-old, whom I knew only as “xXXBongzandNoScopez69XXx”.

Thanks pal.

Since then, I’ve been called every name under the sun while indulging in my favourite online hobby, whether raiding the Ascalonian catacombs in Guild Wars 2, defusing the bomb at B in CS:GO or just trying to chill out with some Hearthstone. (True, you don’t have to talk to other players unless you want to, but you might be surprised how often an attempt to be social in Hearthstone results in a barrage of colourful language from someone less than thrilled about losing to my face hunter deck on turn four.)

As long as people play together online, anonymously from behind a screen and headset, there will always be an unfortunate minority who feel it necessary to be toxic.

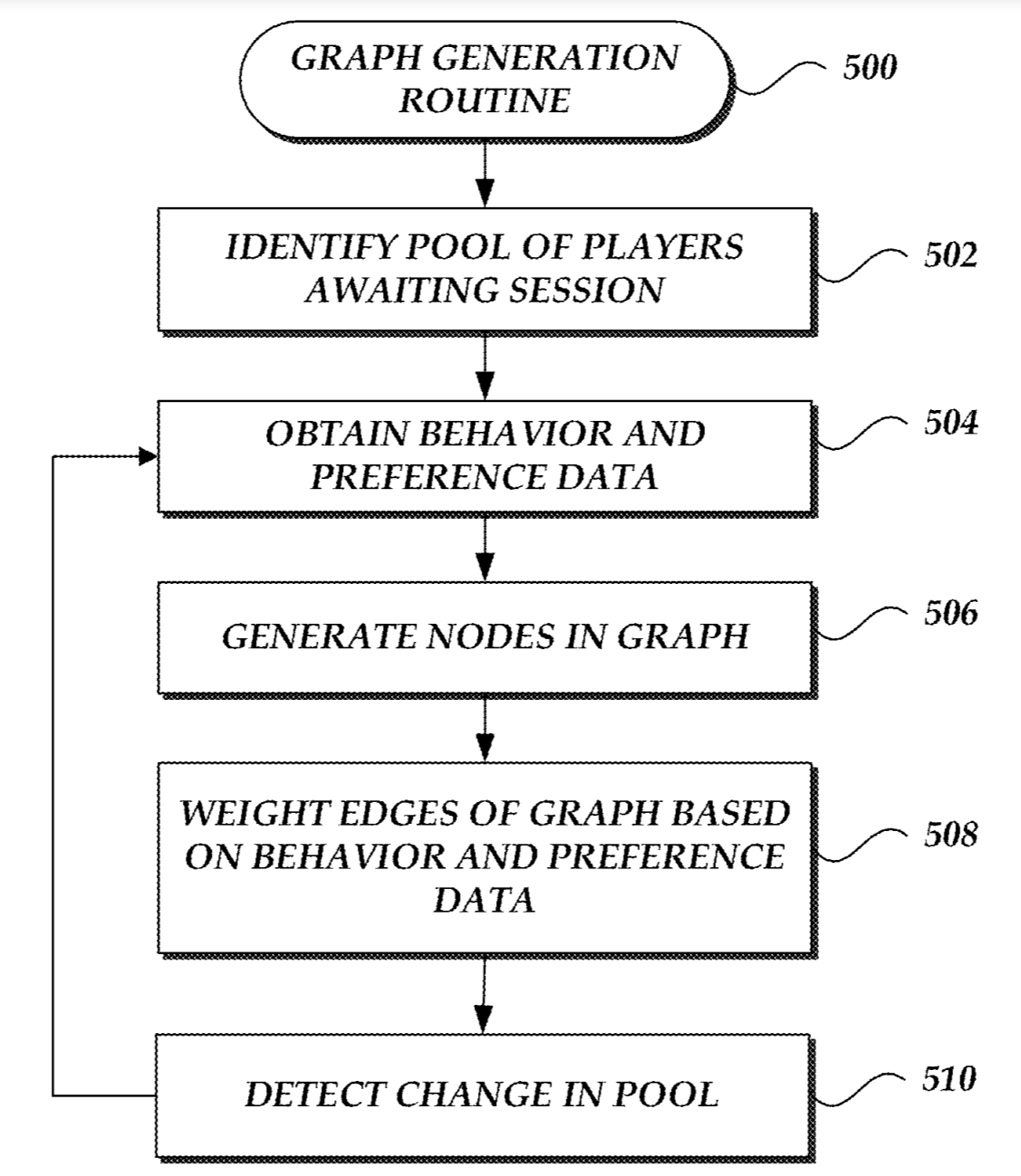

A new Amazon patent, however, could make headway towards changing the online gaming toxicity landscape. The company is proposing a system to refine matchmaking based on players’ language and behaviour towards others.

While developers typically choose to separate players based on skill level, Amazon acknowledges that “such systems naively assume that skill is the primary or only factor to players' enjoyment.”

“Players' enjoyment may depend heavily based on behaviours of other users with which they are paired, such as the proclivity of other players to use profanity or engage in other undesirable behaviours.”

The patent was filed in 2017 and granted in October this year.

Amazon’s plan is for a system that would attempt to more intelligently match players with like-minded opponents, “based on analysis of in-game actions”. A player’s behaviour and preferences in-game would be “represented as a node within a graph… connected via an edge weighted according to a compatibility of preferences.”
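To make the graph idea a little more concrete, here is a minimal Python sketch of how compatibility-weighted matchmaking could work. It is our own illustration, not Amazon’s method: the two traits (`profanity`, `quitting`), the 0-to-1 scores and the compatibility formula are all hypothetical. Each player is a node, every pair of players is joined by an edge whose weight reflects how well each player’s behaviour fits the other’s tolerances, and a simple greedy pass pairs off the most compatible players first.

```python
from dataclasses import dataclass
from itertools import combinations

# Hypothetical behaviour categories; the patent does not define this list.
TRAITS = ("profanity", "quitting")


@dataclass
class Player:
    name: str
    behaviour: dict  # how often the player does each trait, 0.0 to 1.0 (assumed scale)
    tolerance: dict  # how much of each trait the player is happy to put up with, 0.0 to 1.0


def compatibility(a: Player, b: Player) -> float:
    """Edge weight in (0, 1]; 1.0 means neither player exceeds the other's tolerance."""
    penalty = 0.0
    for trait in TRAITS:
        # How far each player's behaviour overshoots what the other will tolerate.
        penalty += max(0.0, b.behaviour[trait] - a.tolerance[trait])
        penalty += max(0.0, a.behaviour[trait] - b.tolerance[trait])
    return 1.0 / (1.0 + penalty)


def build_graph(players):
    """Players are nodes; every pair is joined by an edge weighted by compatibility."""
    return {(a.name, b.name): compatibility(a, b) for a, b in combinations(players, 2)}


def greedy_pairs(graph):
    """Pair players by repeatedly taking the heaviest edge whose ends are both still free."""
    matched, pairs = set(), []
    for (a, b), weight in sorted(graph.items(), key=lambda kv: kv[1], reverse=True):
        if a not in matched and b not in matched:
            pairs.append((a, b, weight))
            matched.update((a, b))
    return pairs


# Made-up example players.
players = [
    Player("parent", {"profanity": 0.0, "quitting": 0.1}, {"profanity": 0.0, "quitting": 0.2}),
    Player("sailor", {"profanity": 0.9, "quitting": 0.1}, {"profanity": 1.0, "quitting": 0.2}),
    Player("friend", {"profanity": 0.8, "quitting": 0.0}, {"profanity": 1.0, "quitting": 0.1}),
    Player("kid", {"profanity": 0.0, "quitting": 0.0}, {"profanity": 0.1, "quitting": 0.5}),
]

for a, b, weight in greedy_pairs(build_graph(players)):
    print(f"{a} + {b}: {weight:.2f}")
```

With these made-up numbers, the sketch pairs the parent with the kid and the two potty mouths with each other. The greedy pass is only there for brevity; a real matchmaker would more likely use a proper maximum-weight matching and deal with whole teams rather than one-on-one pairs.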

While we’ve seen developers take steps to punish players for abusive behaviour before, the patent’s language acknowledges that toxicity is a broad concept and difficult to define. The subtleties inherent in players’ enjoyment of multiplayer games make it difficult to categorise people as either good or bad. For instance, a player who uses colourful language in group chat might offend a parent playing with their child, but be perfectly acceptable to that player’s close friends.

Meanwhile, another form of toxicity comes from so-called rage quitters, who leave an online match as soon as things look dire, potentially abandoning hard-working teammates (this is never OK, by the way).

This new system would attempt to refine the labelling of toxic behaviour further by grouping players based on the specific types of toxicity they exhibit, and by allowing them some freedom to filter their matches according to personal preference (for example, you might be OK being matched with potty mouths but want to avoid rage quitters at all costs).
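Per-category filtering of this kind could work as a hard opt-out layered on top of matchmaking. The minimal Python sketch below is our own illustration rather than anything taken from the patent: the category names, the 0-to-1 scores and the 0.3 cut-off are all assumptions. Each player lists the categories they never want to meet, and a candidate is only eligible if neither side trips the other’s list.

```python
from dataclasses import dataclass, field

# Hypothetical cut-off above which a player counts as "exhibiting" a category.
THRESHOLD = 0.3


@dataclass
class Profile:
    name: str
    # Hypothetical per-category scores, e.g. share of recent matches with a flagged incident.
    toxicity: dict = field(default_factory=dict)
    # Categories this player has opted out of being matched with.
    avoid: set = field(default_factory=set)


def exhibits(player: Profile, category: str) -> bool:
    """True if the player's score in this category is above the cut-off."""
    return player.toxicity.get(category, 0.0) > THRESHOLD


def acceptable(a: Profile, b: Profile) -> bool:
    """A match is allowed only if neither player shows a category the other has opted out of."""
    a_ok = not any(exhibits(b, c) for c in a.avoid)
    b_ok = not any(exhibits(a, c) for c in b.avoid)
    return a_ok and b_ok


def eligible_opponents(seeker: Profile, pool: list) -> list:
    """Names of everyone in the pool the seeker may be matched with."""
    return [p.name for p in pool if p is not seeker and acceptable(seeker, p)]


# Made-up example: "you" are fine with swearing but want to avoid rage quitters.
pool = [
    Profile("you", avoid={"rage_quit"}),
    Profile("potty_mouth", toxicity={"profanity": 0.8}),
    Profile("rage_quitter", toxicity={"rage_quit": 0.5}),
    Profile("both", toxicity={"profanity": 0.6, "rage_quit": 0.4}),
]

print(eligible_opponents(pool[0], pool))
```

Making the check mutual is a design choice: with a one-sided filter, a player who opted out of profanity could still be pulled into a potty mouth’s match whenever the potty mouth was the one searching.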

Within these subsections there is even more room for interpretation. Is a player who often leaves matches doing so out of frustration, or simply because they live in a country with a spotty internet connection? (How’s your NBN this week, by the way?) If they are leaving out of frustration, is it the player’s fault, or are other players wilfully making the game unfun for them? There might also be an inherent problem with a specific level or mechanic on the development side. There are a lot of variables at play here.

At its core, letting players avoid abusive language and behaviour when playing online seems like a clear win. But it’s worth considering some wider implications here.

The use of sophisticated AI and software to monitor users of online products has been a growing concern in recent years, with all of the tech giants accused at some point of shady data collection and data-selling practices.

Unless the patent were implemented using a recommendation system (which is often open to exploitation by the very players you’re trying to send to the toxicity ghetto), this potential new technology may also resort to listening in on players to track their language and behaviour.

That leads to bigger questions around data management and integrity. Could this data be sold? Or used for more targeted advertising? It’s not hard to envision a future where a few casual Aussie c-bombs in your Fortnite match could see you getting ads for Four ‘N Twenty pies in the pre-game lobby.

The potential for software-driven player segregation also raises questions about the way we are classed in online activities.

Is it fair to simply clump so-called toxic players together without attempting to understand the mechanisms that lead people to exhibit negative behaviour in the first place?

The patent contains language suggesting that the simplest solution to the problem is to “isolate all 'toxic' players into a separate player pool, such that one toxic player is paired only with other toxic players.”

Other video game companies have employed toxicity-curbing measures in the past, in games such as Rainbow Six Siege and Fall Guys. However, these systems can be very heavy-handed, issuing instant bans for example, or act as a black box, leaving players with little to no knowledge of when they have been punished.

There is also some historical precedent suggesting that throwing a blanket over all players labelled toxic and forcing them to play together perpetuates a cycle, one that prevents them from repairing their online credentials and returning to a more reasonable state of play.

One well-known case is Nintendo’s Super Smash Bros. In what the community dubbed “For Glory Hell”, toxic players were matched with each other based on a recommendation system, with no notification or warning that they were being downgraded in an invisible player ranking. Players labelled toxic would gradually find themselves matched only with other griefers, leaving them little recourse but to keep reporting each other and remain in the proverbial naughty corner forever.

However things play out, don’t expect this prospective technology to change the gaming landscape any time soon. As a games publisher, Amazon has not exactly been pumping out hits since launching its games studio over eight years ago.

Amazon shut down its first online game, Crucible, after only five months, so the most likely place for this technology to surface would be Athlon Games’ Lord of the Rings MMO, on which Amazon is partnering. That title, however, has no release date, and only sparse details about its development have been shared so far.

Until refinements in technology come to save the day once again, the best advice we can give you is to just try to play nice with each other, OK?
